Unifying Long Context LLMs and RAG

Toward advances in long-context LLMs and RAG

Background

Newly released LLMs have ever larger context windows; GPT-4 Turbo, for example, accepts a 128k-token input context. Naturally, this raises the question: is RAG still necessary? Most small-data use cases can fit within a 1M-10M token context window, and tokens will get cheaper and faster to process over time. The topic is still under debate.

Long Context LLM with Pain Points Resolved

Pain points resolved:

- Conversational memory is easier to build: 1M-10M token context windows will let users implement conversational memory more easily, with fewer compression hacks (e.g. vector search or automatic KG construction), while avoiding overflow.

- RAG is easier to tune:

  - Text splitting: long-context LLMs allow the native chunk size to be bigger.

  - Retrieval: an issue with small-chunk top-k RAG is that, while some questions can be answered from a specific snippet of a document, others require deep analysis across sections or across two documents (for instance, comparison queries). For these use cases, users will no longer have to rely on a chain-of-thought agent that runs two retrievals against a weak retriever; instead, they can prompt the LLM once to obtain the answer.

Challenges

Context Not Large Enough in Practice

In fact, even 10M tokens is still not enough for large document corpora. For example, 1M tokens is roughly 7 Uber SEC 10-K filings, while many enterprise knowledge corpora run to gigabytes or terabytes.
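To make the gap concrete, here is a rough back-of-the-envelope sketch. It assumes about 4 bytes of plain English text per token, which is a common rule of thumb and not a figure from this article; the function names are made up for illustration, and real tokenizers and file formats will give different numbers.

```python
# Rough sizing sketch. ASSUMPTION: ~4 bytes of plain text per token.
# This is a rule of thumb, not a measured value for any specific tokenizer.

def estimate_tokens(corpus_bytes: int, bytes_per_token: float = 4.0) -> int:
    """Estimate how many tokens a plain-text corpus of the given size holds."""
    return int(corpus_bytes / bytes_per_token)


def windows_needed(corpus_bytes: int, window_tokens: int,
                   bytes_per_token: float = 4.0) -> int:
    """Number of full context windows required to hold the whole corpus."""
    tokens = estimate_tokens(corpus_bytes, bytes_per_token)
    return -(-tokens // window_tokens)  # integer ceiling division


GB = 10**9
TB = 10**12

print(windows_needed(GB, 10_000_000))  # 25
print(windows_needed(TB, 10_000_000))  # 25000
```

Under these assumptions, a single gigabyte of text already overflows a 10M-token window about 25 times over, so some form of selection is still needed before the LLM call.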

Context Stuffing Is Not the Optimal Way to Feed an LLM

Lost in the Middle: The Accuracy Problem with Context Stuffing

A recent study found that LLMs perform better when the relevant information is located at the beginning or end of the input context.

However, when the relevant information sits in the middle of a long context, retrieval performance degrades considerably. This also holds for models specifically designed for long contexts.

Extended-context models are not necessarily better at using input context.
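One way to act on this finding, offered here as an illustrative mitigation and not as something the study itself prescribes, is to reorder retrieved chunks so that the most relevant ones land at the start and end of the prompt and the least relevant ones end up in the middle. The function name below is hypothetical, and the sketch assumes the input list is already sorted from most to least relevant.

```python
# Sketch: place the strongest chunks at the edges of the prompt.
# ASSUMPTION: `chunks_by_relevance` is sorted most relevant first.

def reorder_for_long_context(chunks_by_relevance: list[str]) -> list[str]:
    """Alternate chunks between the front and the back of the context.

    Even-ranked chunks (0, 2, 4, ...) fill the front in order;
    odd-ranked chunks (1, 3, 5, ...) fill the back in reverse order,
    so the weakest chunks meet in the middle.
    """
    front: list[str] = []
    back: list[str] = []
    for rank, chunk in enumerate(chunks_by_relevance):
        if rank % 2 == 0:
            front.append(chunk)
        else:
            back.append(chunk)
    return front + back[::-1]


ranked = ["a", "b", "c", "d", "e"]  # "a" is the most relevant
print(reorder_for_long_context(ranked))  # ['a', 'c', 'e', 'd', 'b']
```

The two highest-ranked chunks ("a" and "b") end up first and last, while the lowest-ranked chunk ("e") sits in the middle, where the model is least likely to use it.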

Cost and Latency

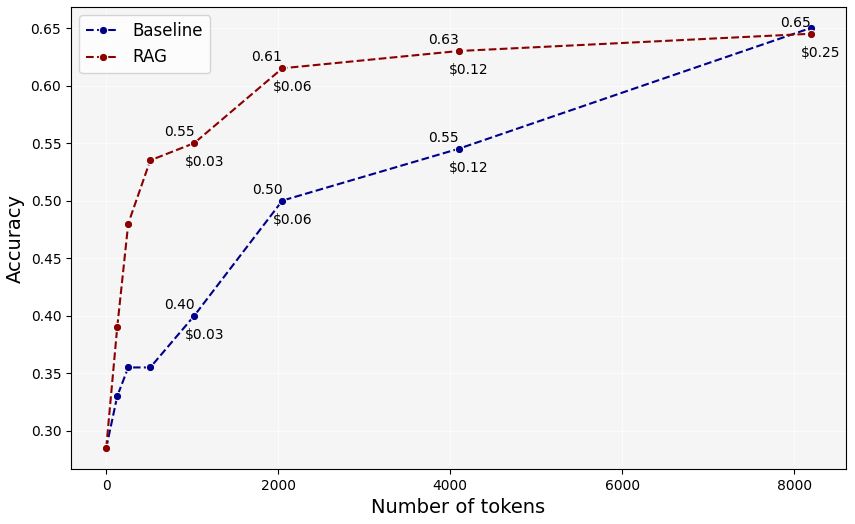

A recent study compared the performance per token of GPT-4 under two scenarios:

- Using retrieval on document segments (RAG).

- Sequentially processing the document from the beginning, referred to as the baseline.

To conclude, the experiments show that stuffing the context window isn’t the optimal way to provide information for LLMs. Specifically, they show that:

- LLMs tend to struggle to distinguish valuable information when flooded with large amounts of unfiltered information.

- Using a retrieval system to find and provide narrow, relevant information boosts the models’ efficiency per token, which results in lower resource consumption and improved accuracy.

- The above holds true even when a single large document is put into the context, rather than many documents.
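The contrast between the two scenarios can be sketched with a toy example. Everything below is an assumption made for illustration: the sample segments are invented, the word-overlap scorer stands in for a real retriever, and counting words stands in for a real tokenizer. It is not the setup used in the study.

```python
import re
from typing import Optional

# Toy comparison of "stuff everything" versus "retrieve top-k segments".
# ASSUMPTIONS: word overlap replaces a real retriever, and word counts
# replace real token counts. The sample segments are invented.


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, segment: str) -> int:
    """Number of distinct words shared by the query and the segment."""
    return len(set(tokenize(query)) & set(tokenize(segment)))


def build_context(query: str, segments: list[str],
                  top_k: Optional[int] = None) -> str:
    """Return all segments (baseline) or only the top_k best-scoring ones."""
    if top_k is None:
        chosen = list(segments)
    else:
        chosen = sorted(segments, key=lambda s: overlap_score(query, s),
                        reverse=True)[:top_k]
    return "\n\n".join(chosen)


segments = [
    "The company leases office space in several cities.",
    "Revenue grew 12 percent year over year driven by mobility bookings.",
    "Risk factors include regulatory changes and driver classification.",
    "Legal proceedings are described in the notes to the financial statements.",
]
query = "How much did revenue grow year over year?"

stuffed = build_context(query, segments)
retrieved = build_context(query, segments, top_k=1)

print(len(tokenize(stuffed)), "words when stuffing every segment")
print(len(tokenize(retrieved)), "words with top-1 retrieval")
```

Even in this tiny example the retrieved context carries the answer in a fraction of the words, which is the intuition behind the per-token efficiency argument above.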

Embedding Model with Smaller Context Length

Embedding models are lagging behind in context length. Most embedding models are BERT/Transformer-based and typically have short context lengths (e.g., 512 tokens). That’s only about two pages of text. So far, the largest context window for an embedding model is 32k tokens, from M2-BERT. This means that even if the chunks used for synthesis with long-context LLMs can be big, any text chunks used for retrieval still need to be a lot smaller.
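One way to reconcile the two sizes is to embed small chunks but hand the LLM the larger chunk each one came from. The sketch below is a minimal illustration under stated assumptions: word counts stand in for token counts, the default sizes are arbitrary, and the function names are hypothetical. The 300-word child size is only a guess at what fits under a 512-token embedding limit.

```python
# Sketch: small chunks for retrieval, large chunks for synthesis.
# ASSUMPTIONS: words approximate tokens; sizes are illustrative only.


def split_words(text: str, max_words: int) -> list[str]:
    """Split text into consecutive pieces of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def build_small_to_big_index(document: str, parent_words: int = 2000,
                             child_words: int = 300):
    """Return (parents, index), where index maps each child to its parent.

    parents: large chunks that would be passed to a long-context LLM.
    index:   list of (child_text, parent_id) pairs; only child_text
             would be sent to the embedding model.
    """
    parents = split_words(document, parent_words)
    index = []
    for parent_id, parent in enumerate(parents):
        for child in split_words(parent, child_words):
            index.append((child, parent_id))
    return parents, index


document = " ".join(f"w{i}" for i in range(5000))  # synthetic 5000-word text
parents, index = build_small_to_big_index(document)
print(len(parents), "parent chunks,", len(index), "child chunks")
```

At query time, a retriever would match the query against the child embeddings and then pass `parents[parent_id]` for the best matches to the LLM, so the retrieval unit stays small while the synthesis unit stays large.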

Lost in the Middle, a paper published in 2023, found that models with long context windows do not robustly retrieve information that is buried in the middle of a long prompt. The bigger the context window, the greater the loss of mid-prompt context.